Googleshop.us has consumer electronics, home electronics, fashion, and other products from various vendors.

Amazon is one of the largest online retailers in the world, offering a wide range of products from books to electronics to clothing and more. You can find almost anything you need on Amazon.

Walmart is another popular online store that offers a wide range of products, including groceries, electronics, clothing, and more. They also offer free two-day shipping on many items.

eBay is an online auction and shopping website that allows users to buy and sell a wide range of products, including electronics, clothing, collectibles, and more.

Target is a popular online store that offers a wide range of products, including clothing, electronics, home goods, and more. They also offer free two-day shipping on many items.

Best Buy is a popular online store that specializes in electronics, including computers, TVs, cameras, and more. They also offer free shipping on many items.

Online shopping refers to the process of purchasing goods or services over the internet. Instead of physically visiting a brick-and-mortar store, consumers can browse and buy products through websites or mobile apps. Online shopping has become increasingly popular due to its convenience, accessibility, and the ability to shop from anywhere at any time.

Amazon, Walmart, and eBay sell a wide variety of electronics, including laptops, tablets, smartphones, televisions, cameras, headphones, speakers, and gaming consoles such as Xbox and PlayStation. They also offer a range of smart home devices such as smart speakers, smart displays, and smart thermostats. Amazon’s own line of electronics includes the Amazon Echo, Fire TV Stick, Kindle e-readers, and Fire tablets.

Google in the News

As of January 2024, the minimum wage at Google is about $22 per hour.

Google is a technology company that offers a wide range of products and services. Some of the products offered by Google include:

Google Search: A search engine that allows users to find information on the internet.

Google Maps: A mapping service that provides directions, traffic updates, and other location-based information.

Google Drive: A cloud storage service that allows users to store and share files.

Google Docs: A word processing program that allows users to create and edit documents online.

Google Sheets: A spreadsheet program that allows users to create and edit spreadsheets online.

Google Slides: A presentation program that allows users to create and edit presentations online.

Google Photos: A photo sharing and storage service that allows users to store, organize, and share photos and videos.

Google Translate: A translation service that allows users to translate text and web pages into different languages.

Google Chrome: A web browser that allows users to browse the internet.

Google Calendar: A calendar service that allows users to schedule and manage events.

Google Meet: A video conferencing service that allows users to hold virtual meetings.

Google Assistant: A virtual assistant that can help users with tasks and answer questions.

Google Home: A smart speaker that allows users to control smart home devices and play music.

Google Pixel: A line of smartphones developed by Google.

Google is a multinational technology company that specializes in Internet-related services and products. It was founded in 1998 by Larry Page and Sergey Brin while they were Ph.D. students at Stanford University. Some of Google’s most popular products include Google Search, Google Maps, Google Drive, Google Photos, and YouTube. Google Search is a fully automated search engine that uses software known as "web crawlers" to explore the web. Google is a subsidiary of Alphabet Inc., its parent company. Google also has a commercial relationship with the Wikimedia Foundation, the nonprofit that operates Wikipedia.

Platform wedge sandals are a type of footwear that combines the features of both platforms and wedges. Here's a breakdown of the terms:

Platform: Refers to the thick sole under the entire foot, providing elevation. Unlike regular heels, platforms lift both the heel and the toe, creating a more comfortable walking experience.

Wedge: Describes the shape of the heel, which runs under the shoe in a continuous piece, from the back of the shoe to the middle or front.

Combining these elements, platform wedge sandals have a thick, elevated sole with a wedge-shaped heel. They can come in various styles, such as open-toe or closed-toe, and the height of the wedge can vary. These sandals are popular in women's fashion for their ability to provide extra height and elongate the legs while maintaining comfort compared to traditional high heels. They are often worn in casual or semi-formal settings, depending on the design and materials used.

Diversity, Equity, and Inclusion (DEI) at Google

Google has been taking steps to build diversity, equity, and inclusion into everything they do. According to their 2022 Diversity Annual Report, Google achieved their best year yet for hiring and retaining people from underrepresented communities. They also developed a wide variety of new methods to better support every Googler’s growth. In addition, Google invested responsibly in every market they call home, growing how they support community partners around the globe. They also took action to create a more flexible and accessible work culture. Finally, they expanded their efforts to foster a sense of belonging, not just for employees, but for every community they impact.

Google, like many other major companies, has been actively working to hire and promote a diverse workforce, which includes individuals from underrepresented racial and ethnic groups, such as African Americans and Latinos.

Women's open-toe high heels are a type of footwear characterized by a raised heel and an open front, exposing the toes. Here are some key features and variations:

Heel Height: High heels come in various heights, and the term "high heels" generally refers to shoes with taller heels that elevate the wearer's feet significantly. Heights range from moderate styles of a couple of inches to much taller designs.

Open Toe: Open-toe high heels have a design that leaves the toes exposed. This can include sandals with a single strap across the toes, peep-toe styles that reveal a portion of the toes, or completely open-front designs.

Style and Design: There are numerous styles of open-toe high heels, including stilettos, wedges, block heels, and more. The design can range from casual to formal, making them suitable for various occasions.

Materials: High heels can be made from various materials, including leather, suede, synthetic materials, and more. The choice of material often influences the overall look and feel of the shoes.

Straps and Details: Some open-toe high heels may have additional straps, embellishments, or decorative details, adding to their aesthetic appeal.

These types of shoes are popular in women's fashion and are often worn for formal events, parties, or when a more polished and sophisticated look is desired. The style and design can vary widely to suit different tastes and outfit choices.

Life at Google

Stories from Googlers, interns, and alumni on how they got to Google. Google offers internships in fields such as software engineering, business, and user experience, and you can explore all open internships on the Google Careers site. With internships across the globe, Google offers many opportunities to grow with the company and help create products and services used by billions.

Loafers are a style of slip-on shoes that are often characterized by a moccasin-like construction and a low, broad heel. They are known for their comfort, versatility, and the absence of laces or fastenings. Men's loafers come in various styles, and they are popular for both casual and more formal occasions. Here are some common types of men's loafers:

Penny Loafers: These have a distinctive strap across the front with a diamond-shaped slot, traditionally meant for holding a penny. Penny loafers are versatile and can be worn with both casual and semi-formal outfits.

Tassel Loafers: Tassel loafers are characterized by decorative tassels on the front. These loafers often have a more sophisticated and dressy appearance, making them suitable for business casual or formal settings.

Bit Loafers: These loafers feature a metal ornament, usually in the shape of a horsebit, on the top of the shoe. Bit loafers have a slightly more formal look and are often worn in business or dressy casual situations.

Driving Loafers: Designed for comfort, driving loafers often have a softer sole and a more casual appearance. They are well-suited for driving but are also popular for everyday casual wear.

Venetian Loafers: Venetian loafers are simple and sleek, lacking any ornamentation or visible seams on the upper part of the shoe. They are known for their clean and minimalist design.

Loafers can be made from various materials, including leather, suede, and synthetic materials. The color and material choice can significantly impact the formality of the loafers. Whether for a casual day out, business casual attire, or a more formal event, loafers provide a stylish and comfortable option for men's footwear.

Google AI

Gemini is a family of multimodal large language models developed by Google DeepMind, and it competes with OpenAI's GPT-4. Artificial Intelligence (AI) is the ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings. AI is a rapidly growing field that has the potential to revolutionize the way we live and work. Companies like Google and Microsoft are investing heavily in AI research and development, and AI is being used in a wide range of applications, including natural language processing, image recognition, and robotics.

"AI" stands for Artificial Intelligence. Artificial Intelligence refers to the development of computer systems that can perform tasks that typically require human intelligence. These tasks include problem-solving, learning, planning, natural language understanding, and perception.

There are two main types of AI:

Narrow or Weak AI: This type of AI is designed to perform a specific task. It operates under a limited set of pre-defined conditions and doesn't possess general intelligence. Examples include virtual personal assistants like Siri and Alexa.

General or Strong AI: This type of AI is hypothetical and would have the ability to understand, learn, and apply knowledge across different domains, similar to human intelligence. Currently, we have narrow AI, and achieving general AI remains a goal for the future.

AI is applied in various fields, including:

Machine Learning: A subset of AI that focuses on developing algorithms that enable computers to learn from data.

Natural Language Processing (NLP): Enables computers to understand, interpret, and generate human language.

Computer Vision: Involves teaching computers to interpret and make decisions based on visual data, such as images or videos.

Robotics: AI is used to enhance the capabilities of robots, allowing them to perform tasks in diverse environments.

Healthcare: AI is employed in medical diagnostics, drug discovery, and personalized medicine.

Finance: Used for fraud detection, algorithmic trading, and customer service.

AI has the potential to bring about significant advancements, but ethical considerations and responsible development are essential to ensure its positive impact on society.
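To make the machine learning idea of "learning from data" more concrete, here is a minimal sketch in Python. The hypothetical `fit_line` function learns a slope and intercept from example data using ordinary least squares, one of the simplest learning methods, and `predict` applies what was learned to a new input. The function names and the study-hours data are made up for illustration; real machine learning systems use far larger models and datasets.

```python
def fit_line(xs, ys):
    """Learn slope and intercept that minimize squared error (ordinary least squares)."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired data points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both left unnormalized; the n cancels)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    if var == 0:
        raise ValueError("x values must not all be equal")
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Use the learned parameters to estimate y for a new x."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical toy data: hours studied vs. test score
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]

model = fit_line(hours, scores)
print("learned slope and intercept:", model)
print("predicted score for 6 hours:", predict(model, 6))
```

The "learning" here is simply choosing the two numbers that best fit the examples; Python 3.10 and later also provide `statistics.linear_regression`, which computes the same kind of fit.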

Gmail News

Here are some Gmail tips:

1. Use keyboard shortcuts to navigate your inbox more efficiently.

2. Organize your emails with labels and filters to keep your inbox tidy.

3. Take advantage of the "Undo Send" feature to retract an email right after sending it.

4. Enable two-factor authentication (Google calls it 2-Step Verification) for added security.

5. Use the "Templates" feature (formerly "Canned Responses") to save and reuse messages you send often.

Men's suits are a classic and versatile form of formal or semi-formal attire. They typically consist of a matching jacket and trousers, often accompanied by a dress shirt, tie, and sometimes a vest or waistcoat. Here are key elements and considerations for men's suits:

Jacket:

Lapel Styles: Suits can have different lapel styles, such as notch lapels, peak lapels, or shawl lapels.

Buttons: Single-breasted and double-breasted are common styles, with variations in the number and placement of buttons.

Trousers:

Pleats: Trousers may have pleats (folds) or a flat front, depending on the style and personal preference.

Cuffs: The bottom of the trousers may or may not have cuffs, and the decision often depends on personal style.

Shirt:

Color: White or light-colored dress shirts are common, but patterned shirts can also be worn depending on the formality of the occasion.

Collar Styles: Spread, point, and button-down collars are popular choices.

Cuff Styles: French cuffs (double cuffs) or barrel cuffs are common options.

Tie:

Color and Pattern: Ties add a splash of color and can be solid, striped, or patterned, complementing the shirt and suit.

Knot Styles: Common knots include the four-in-hand, half-Windsor, and full-Windsor.

Vest or Waistcoat:

Optional: A vest or waistcoat is optional but can add a touch of formality and style to the ensemble.

Accessories:

Pocket Square: A pocket square in the jacket's breast pocket can be a stylish addition.

Cufflinks: If wearing French cuffs, cufflinks are often used.

Material:

Wool: Common for year-round wear.

Cotton: Suitable for warmer weather.

Linen: Lightweight and breathable, ideal for summer.

Fit:

Tailoring: A well-fitted suit is crucial. Tailoring ensures the jacket and trousers fit properly and enhance the wearer's silhouette.

Occasions:

Formal vs. Casual: Suits range from formal (e.g., business meetings, weddings) to more casual (e.g., business casual events).

Remember that the choice of suit depends on the occasion, personal style, and individual preferences. Tailoring is key to achieving a polished and professional look.

Google Docs, Sheets, Slides, Forms, and Sites.

Google Docs is a free, web-based word processor that allows users to create and edit documents online. It is part of the Google Docs Editors suite, which also includes Google Sheets, Google Slides, Google Drawings, Google Forms, Google Sites, and Google Keep. Google Docs is accessible from any device with an internet connection and a web browser. You can use it to create and collaborate on documents with your team, sharing them securely and editing together in real time.

Here are some popular online shopping websites:

Amazon: Amazon is one of the largest online retailers in the world, offering a wide range of products and services, including books, electronics, clothing, and more. They also offer a variety of digital services, such as streaming movies and TV shows, music, and cloud storage.

eBay: eBay is an online marketplace that allows individuals and businesses to buy and sell goods and services. You can find a wide range of products on eBay, including electronics, clothing, collectibles, and more.

Walmart: Walmart is a multinational retail corporation that operates a chain of hypermarkets, discount department stores, and grocery stores. They offer a wide range of products, including groceries, electronics, clothing, and more.

Etsy: Etsy is an online marketplace that specializes in handmade, vintage, and unique items. You can find a wide range of products on Etsy, including jewelry, clothing, home decor, and more.

Best Buy: Best Buy is a multinational consumer electronics retailer that offers a wide range of products, including computers, appliances, cameras, and more.

Target: Target is a retail corporation that operates a chain of department stores in the United States. They offer a wide range of products, including groceries, electronics, clothing, and more.

Home Depot: Home Depot is a home improvement retailer that offers a wide range of products, including tools, appliances, and building materials.

Kohl’s: Kohl’s is a retail corporation that operates a chain of department stores in the United States. They offer a wide range of products, including clothing, jewelry, and home decor.

Pixel 8 Pro, Pixel 8, Pixel 7a, Pixel Fold, Pixel Tablet, Pixel Watch 2, and Pixel Buds Pro are in the video.

Google Shopping News

Google Shopping is a service provided by Google that allows you to search for, compare, and shop for physical products across different retailers, some of whom pay to advertise their products. You can browse by categories such as electronics, home decor, kitchen & dining, and toys, or search by keywords such as Meta Quest 3, Badland 69229, or Apple iPhone 15 Pro. Google Shopping results show up as thumbnail images that display each product’s retailer and price.

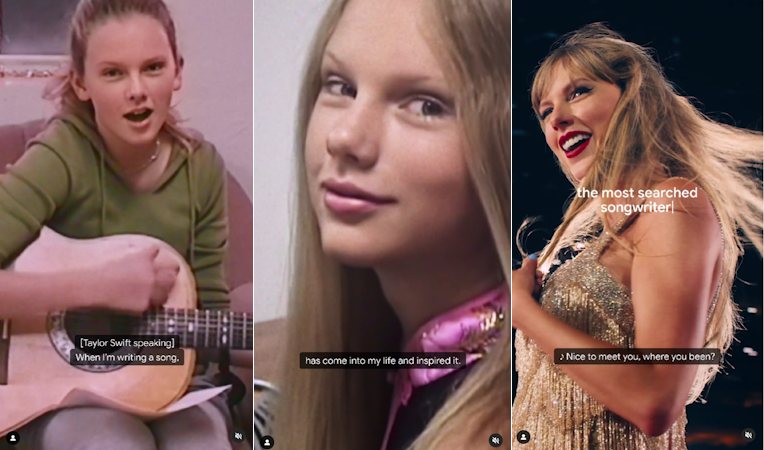

Taylor Swift is an American singer-songwriter who has won numerous awards for her music, including 12 Grammy Awards. She was born on December 13, 1989, in West Reading, Pennsylvania, and began her music career at the age of 14. Swift has released several albums, including “Fearless,” “Speak Now,” “Red,” “1989,” “Reputation,” and “Lover.” Her music is known for its catchy melodies and relatable lyrics, which often focus on love, heartbreak, and personal growth. Swift has also acted in several movies and TV shows, including “Valentine’s Day,” “The Giver,” and “Cats.”

According to Forbes, Taylor Swift’s net worth was estimated at $1.1 billion as of October 2023, making her one of the wealthiest musicians in the world. Her fortune includes more than $500 million in estimated wealth amassed from royalties and touring, plus a music catalog worth $500 million and some $125 million in real estate.

Taylor Swift fans are called Swifties. The name is a cute play on the singer’s last name and was reportedly thought up by a fan. Taylor Swift has one of the biggest fandoms in the world.

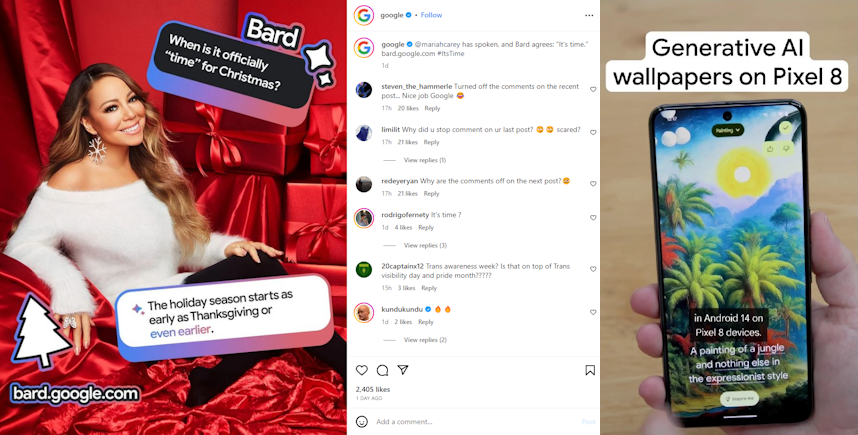

@google: @mariahcarey (Mariah Carey) has spoken, and Bard agrees: “It’s time.” bard.google.com #ItsTime. @mariahcarey: The holiday season starts as early as Thanksgiving or even earlier. @google: How to use generative AI (Artificial Intelligence) wallpapers on Pixel 8 in #Android14 #Pixel8 #AI #Google.

@xrick.c137x: All I Want for Christmas is you. @kajal_nayar_vlogs (Kajal Nayar Vlogs): So Very nice and great sharing.

Google Translate News

Google Translate is a free multilingual machine translation service developed by Google that can instantly translate words, phrases, and web pages between English and over 100 other languages. It offers a website interface, a mobile app for Android and iOS, as well as an API that helps developers build browser extensions and software applications. The service uses machine learning technology to provide translations that are more accurate and natural-sounding than previous translation technologies.

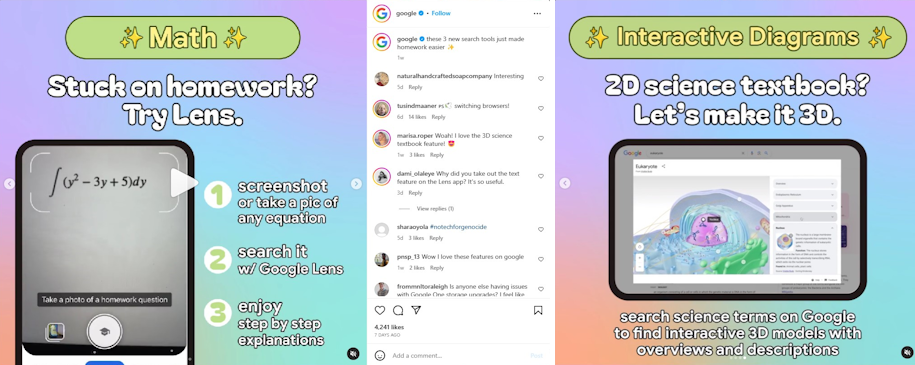

@google: These 3 new search tools just made homework easier. Math - Stuck on homework? Try Lens. 1- screenshot or take a pic of any equation 2- search it with Google Lens 3- enjoy step by step explanations. Science - Search science terms on Google to find interactive 3D models with overviews and descriptions. Physics - Word problem? No problem. @marisa.roper (Marisa Roper): Woah! I love the 3D science textbook feature!

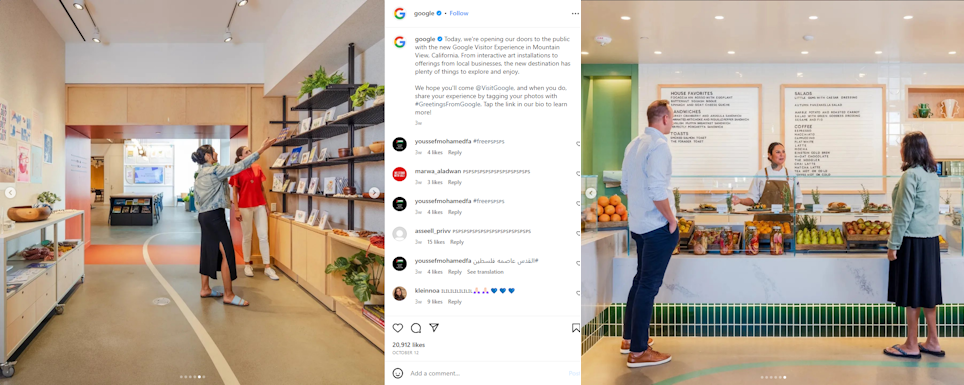

@google: Today, we're opening our doors to the public with the new Google Visitor Experience in Mountain View, California. From interactive art installations to offerings from local businesses, the new destination has plenty of things to explore and enjoy.

We hope you'll come @VisitGoogle, and when you do, share your experience by tagging your photos with #GreetingsFromGoogle. Tap the link in our bio to learn more!

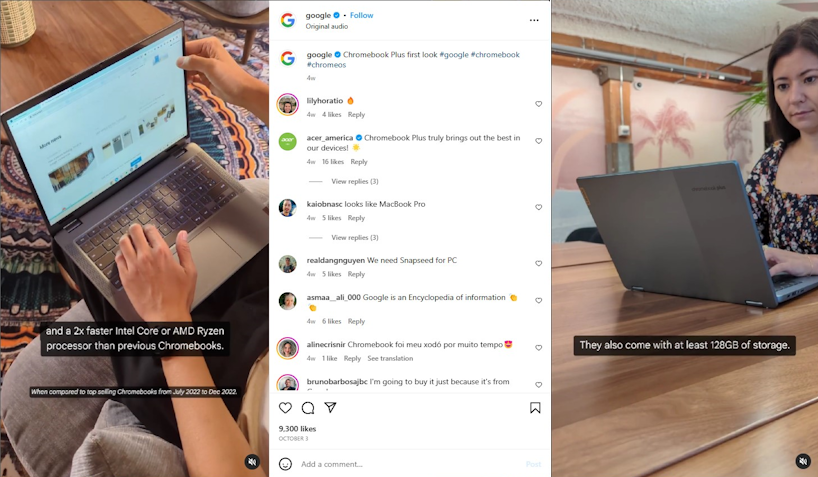

@google: Chromebook Plus first look #google #chromebook #chromeos. @acer_america (Acer America): Chromebook Plus truly brings out the best in our devices! @kaiobnasc: looks like MacBook Pro.

Google Special Logos.

Google New York Office.